Etherscan Launches AI-Powered Code Reader

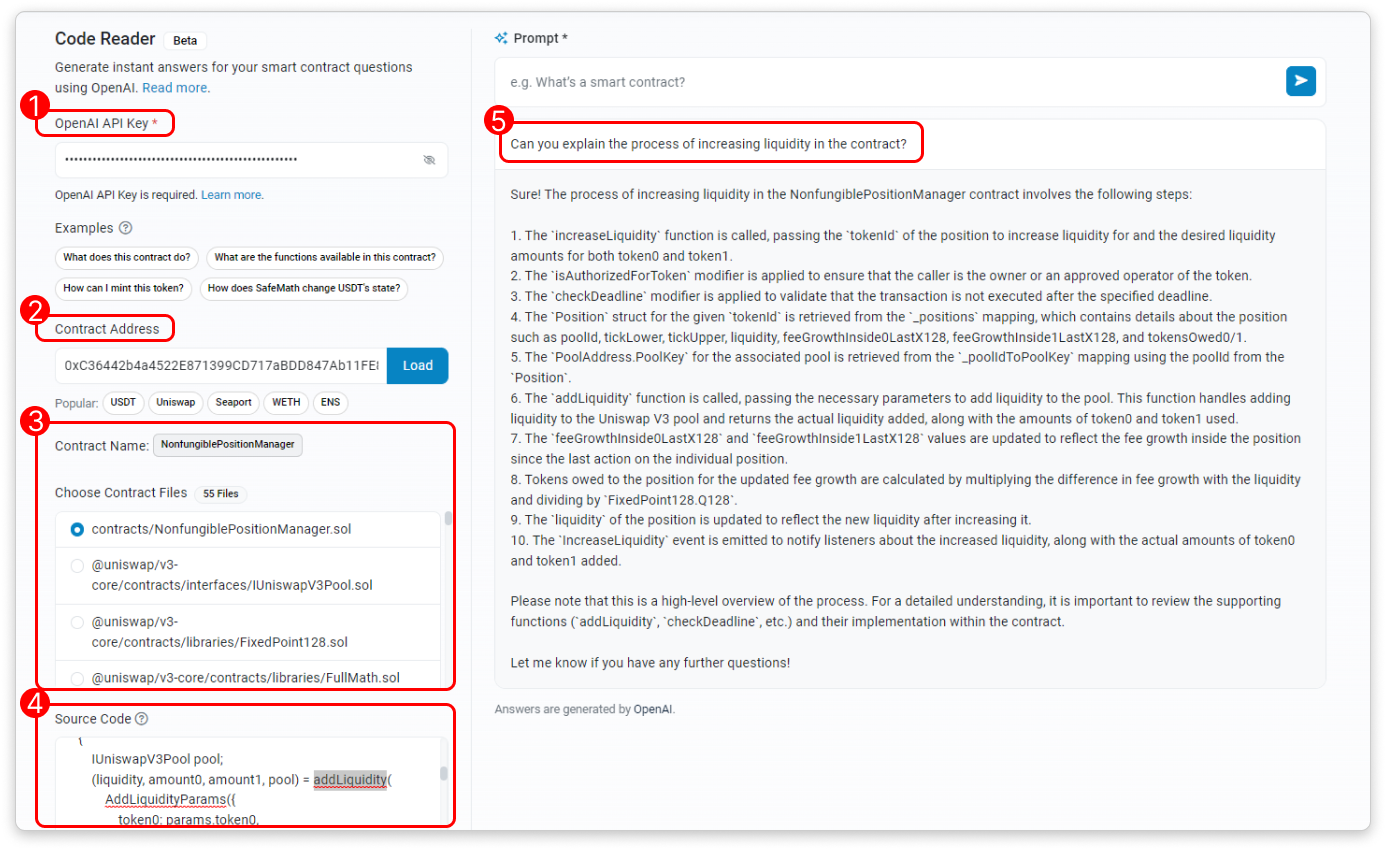

On June 19, Etherscan, the Ethereum block explorer and analytics platform, launched a new tool called “Code Reader” that uses AI to retrieve and interpret the source code of a specific contract address. After a user enters a prompt, Code Reader generates a response via OpenAI's large language model, providing insight into the contract's source code files. The tool's tutorial page reads:

“You need a valid OpenAI API Key and adequate OpenAI usage limits to use the tool. This tool does not store your API keys.”

Code Reader's use cases include gaining deeper insight into a contract's code through AI-generated explanations, obtaining comprehensive lists of smart contract functions related to Ethereum data, and understanding how the underlying contract interacts with decentralized applications.

“Once the contract files are imported, you can select a specific source code file to read. You can also edit the source code directly within the UI before sharing it with the AI,” the page reads.
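Etherscan has not published how Code Reader works internally. The sketch below only illustrates the workflow the tutorial describes (import the contract files, pick one, share it with the AI), assuming the source comes from Etherscan's public `getsourcecode` API (`module=contract&action=getsourcecode`), whose `SourceCode` field holds either a single flattened file, a JSON object of files, or standard-JSON input wrapped in an extra pair of braces. The helpers `split_source_files`, `list_functions` and `build_prompt` are hypothetical names; the function listing is a crude regex rather than a real Solidity parser, and no request is actually sent to OpenAI.

```python
import json
import re


def split_source_files(result: dict) -> dict[str, str]:
    """Return {file name: source} from one getsourcecode result entry (assumed format)."""
    src = result["SourceCode"]
    name = result.get("ContractName", "Contract")
    if src.startswith("{{"):
        # Standard-JSON input: the JSON object is wrapped in one extra pair of braces.
        files = json.loads(src[1:-1])["sources"]
        return {path: entry["content"] for path, entry in files.items()}
    if src.startswith("{"):
        # Multi-file upload: a JSON object mapping file names to {"content": ...}.
        files = json.loads(src)
        files = files.get("sources", files)
        return {path: entry["content"] for path, entry in files.items()}
    # Otherwise treat it as a single flattened Solidity file.
    return {f"{name}.sol": src}


# Matches "function <name>(" declarations; a rough heuristic, not a parser.
FUNC_RE = re.compile(r"\bfunction\s+([A-Za-z_$][A-Za-z0-9_$]*)\s*\(")


def list_functions(source: str) -> list[str]:
    """List declared function names in the order they appear."""
    return FUNC_RE.findall(source)


def build_prompt(file_name: str, source: str, question: str) -> str:
    """Hypothetical prompt layout pairing the user's question with one selected file."""
    return (
        f"You are reviewing the Solidity file {file_name}.\n\n"
        f"{source}\n\n"
        f"Question: {question}"
    )
```

For example, given a result entry whose `SourceCode` is standard-JSON input containing `Token.sol`, `split_source_files` returns `{"Token.sol": ...}`, `list_functions` on that file returns the declared function names, and `build_prompt` produces the text a user might send to the model along with their own API key.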

Amid the AI boom, some experts have raised doubts about the feasibility of current AI models. According to a new report published by Foresight Ventures, a Singapore-based venture capital firm, “computing power resources will be at the center of a major battle over the next decade.” However, despite the growing demand for training large AI models on decentralized, distributed computing-power networks, the researchers say current prototypes face significant limitations, such as data synchronization, network optimization, and data privacy and security.

In one example, Foresight researchers noted that storing a large model with 175 billion parameters in single-precision floating-point representation would require about 700 gigabytes. However, distributed training requires these parameters to be transmitted and updated frequently between computing nodes. With 100 computing nodes, each of which must update all parameters at every unit step, the model would need to transmit 70 terabytes of data per second, far exceeding the capacity of most networks. The researchers summarized:
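The report's figures follow from simple arithmetic, sketched below in decimal units. A single-precision float takes 4 bytes, so 175 billion parameters occupy 700 GB; if each of 100 nodes exchanges the full parameter set once per step, that is 70 TB per step. The article does not say how often a step occurs, so the "per second" figure here rests on an assumed rate of one step per second, and the count naively ignores compression and optimized collective operations such as all-reduce.

```python
PARAMS = 175_000_000_000  # model parameters
BYTES_PER_PARAM = 4       # single-precision float (FP32) = 32 bits = 4 bytes
NODES = 100               # compute nodes, each exchanging the full parameter set
STEPS_PER_SECOND = 1      # assumption: one synchronization step per second

model_bytes = PARAMS * BYTES_PER_PARAM              # size of one copy of the weights
per_step_bytes = model_bytes * NODES                # every node sends all parameters
per_second_bytes = per_step_bytes * STEPS_PER_SECOND

print(f"model size: {model_bytes / 1e9:.0f} GB")              # model size: 700 GB
print(f"traffic per step: {per_step_bytes / 1e12:.0f} TB")    # traffic per step: 70 TB
print(f"traffic per second: {per_second_bytes / 1e12:.0f} TB/s")  # traffic per second: 70 TB/s
```

Doubling the step rate or the node count doubles the required bandwidth, which is why the researchers see network capacity as the bottleneck.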

“In most scenarios, small AI models are still a more viable choice and should not be dismissed too soon due to FOMO [fear of missing out] on large models.”